R. Vertegaal, R. Slagter, G. van der Veer, and A. Nijholt. Eye gaze patterns in conversations: There is more to conversational agents than meets the eyes. In Proceedings of the SIGCHI conference on Human factors in computing systems, pages 301–308, Seattle, WA, USA, March 31-April 4 2001. Association for Computing Machinery. [pdf]

————

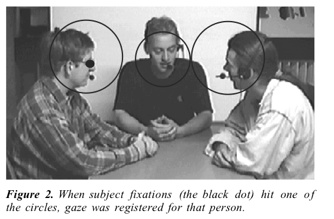

In multi-agent, multi-user environments, users as well as agents should have a means of establishing who is talking to whom. In this paper, we present an experiment aimed at evaluating whether gaze directional cues of users could be used for this purpose. Using an eye tracker, we measured subject gaze at the faces of conversational partners during four-person conversations. Results indicate that when someone is listening or speaking to individuals, there is indeed a high probability that the person looked at is the person listened (p=88%) or spoken to (p=77%). We conclude that gaze is an excellent predictor of conversational attention in multiparty conversations. As such, it may form a reliable source of input for conversational systems that need to establish whom the user is speaking or listening to. We implemented our findings in FRED, a multi-agent conversational system that uses eye input to gauge which agent the user is listening or speaking to.
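To make the idea concrete, here is a minimal sketch of how a system might guess which partner a user is attending to from gaze data. It is not the FRED implementation, which the abstract does not describe in detail. The function name `likely_addressee`, the `(target, duration)` sample format, and the `min_share` threshold are all assumptions made for illustration.

```python
from collections import defaultdict


def likely_addressee(gaze_samples, min_share=0.5):
    """Guess which conversational partner the user is attending to.

    gaze_samples: list of (target, duration_seconds) pairs, where target is
    a partner label, or None when the user looks away from every face.
    min_share: hypothetical threshold; the winning face must account for at
    least this fraction of the total observed time, otherwise no guess is made.
    """
    totals = defaultdict(float)
    for target, duration in gaze_samples:
        totals[target] += duration

    total_time = sum(totals.values())
    if total_time == 0:
        return None

    # Only gaze that landed on a face can nominate a partner.
    faces = {t: d for t, d in totals.items() if t is not None}
    if not faces:
        return None

    best = max(faces, key=faces.get)
    if faces[best] / total_time < min_share:
        return None
    return best


samples = [("agent_a", 1.2), (None, 0.3), ("agent_b", 0.4), ("agent_a", 0.6)]
print(likely_addressee(samples))
```

The threshold reflects the fact that gaze is a strong but imperfect cue: by the figures above, the person looked at is not the person listened or spoken to in a noticeable share of cases, so a system may prefer to make no guess when gaze is spread thinly.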

This paper contains interesting references to eye-tracking studies showing that gaze is used as a communication mechanism to: (1) give or obtain visual feedback; (2) communicate conversational attention; (3) regulate arousal.

Two other interesting ideas that I found in the paper:

1- When preparing their utterances, speakers need to look away to avoid being distracted by visual input (such as prolonged eye contact with a listener) [Argyle and Cook, 1976].

2- If a pair of subjects was asked to plan a European holiday and there was a map of Europe in between them, the amount of gaze dropped from 77 percent to 6.4 percent [Argyle and Graham, 1977].

Tags: eye-tracking, human communication, human computer interaction