I found this nice DIY tutorial on how Canon prototype their cameras.

Simplicity is about subtracting the obvious, and adding the meaningful.

John Maeda

My friend Joachim sent me a nice tag cloud generated by this service: Wordle.net. It is kind of cool to see how few keywords describe what I do. Thanks Joachim!

Mauro

A. I. Rudnicky. Mode preference in a simple data-retrieval task. In HLT ’93: Proceedings of the workshop on Human Language Technology, pages 364–369, Morristown, NJ, USA, 1993. Association for Computational Linguistics. [PDF]

———–

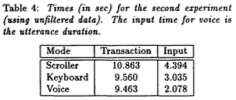

The study reported in this paper indicates that users show a preference for speech input despite its inadequacies in terms of classic measures of performance, such as time-to-completion. Subjects in this study based their choice of mode on attributes other than transaction time (quite possibly input time) and were willing to use speech input even if this meant spending a longer time on the task. This preference appears to persist and even increase with continuing use, suggesting that preference for speech cannot be attributed to short-term novelty effects.

This paper also sketches an analysis technique based on FSM (Finite State Machine) representations of human-computer interaction that permits rapid automatic processing of long event streams. The statistical properties of these event streams (as characterized by Markov chains) may provide insight into the types of information that users themselves compute in the course of developing satisfactory interaction strategies.
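The paper does not include code. The sketch below shows, under stated assumptions, how a first-order Markov chain can be estimated from a stream of interaction events: each consecutive pair of events is counted as one transition of a finite state machine, and the counts are normalized per state. The state labels (`speak`, `type`, `confirm`, `retry`) and the function name `transition_probabilities` are hypothetical and are not taken from Rudnicky's study.

```python
from collections import Counter, defaultdict


def transition_probabilities(events):
    """Estimate first-order Markov transition probabilities.

    `events` is a sequence of hypothetical interaction-state labels;
    every consecutive pair (current, next) counts as one transition.
    """
    counts = defaultdict(Counter)
    for current, nxt in zip(events, events[1:]):
        counts[current][nxt] += 1

    # Normalize the outgoing counts of each state into probabilities.
    probs = {}
    for state, successors in counts.items():
        total = sum(successors.values())
        probs[state] = {s: n / total for s, n in successors.items()}
    return probs


stream = ["speak", "confirm", "speak", "retry", "speak", "confirm", "type", "confirm"]
P = transition_probabilities(stream)
print(P["speak"])  # speak -> confirm with probability 2/3, speak -> retry with 1/3
```

Because only counts are kept, long event logs can be processed in a single pass, which is in line with the "rapid automatic processing" the paper mentions.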

A. Graham, H. Garcia-Molina, A. Paepcke, and T. Winograd. Time as essence for photo browsing through personal digital libraries. In JCDL ’02: Proceedings of the 2nd ACM/IEEE-CS joint conference on Digital libraries, pages 326–335, New York, NY, USA, 2002. ACM. [PDF]

———-

This paper describes PhotoBrowser, a prototype system for personal digital pictures that organizes the content using the timestamp of each photo. The authors' starting point is that text annotations are valuable because they are accessible to a variety of search and processing algorithms. Many systems exploit this possibility but require time-intensive manual annotation (e.g., FotoFile [Kuchinsky et al., 1999] and PhotoFinder [Kang and Shneiderman, 2000]).

The authors propose instead to exploit the timestamps at which the pictures were taken. They cluster the pictures, both organizationally and visually, splitting the sequence wherever there is a long period of inactivity between one picture and the next.

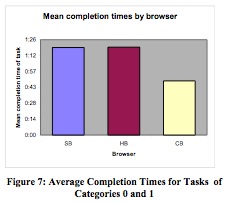
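A minimal sketch of the basic idea, assuming a single fixed gap threshold (the hypothetical `gap` parameter, here two hours): photos are sorted by time, and a new cluster starts whenever the interval since the previous photo exceeds the threshold. PhotoBrowser's own clustering criterion is more elaborate than this, so treat the code as an illustration of gap-based splitting only.

```python
from datetime import datetime, timedelta


def cluster_by_gap(timestamps, gap=timedelta(hours=2)):
    """Split photo timestamps into clusters at long pauses.

    A new cluster starts when the time since the previous photo is
    larger than `gap` (a hypothetical fixed threshold).
    """
    clusters = []
    for ts in sorted(timestamps):
        if clusters and ts - clusters[-1][-1] <= gap:
            clusters[-1].append(ts)   # short pause: same cluster
        else:
            clusters.append([ts])     # long pause (or first photo): new cluster
    return clusters


photos = [
    datetime(2002, 5, 1, 10, 0),
    datetime(2002, 5, 1, 10, 20),
    datetime(2002, 5, 1, 11, 5),
    datetime(2002, 5, 1, 18, 30),   # long pause: starts a new cluster
    datetime(2002, 5, 3, 9, 15),
]
for c in cluster_by_gap(photos):
    print(len(c), c[0])
```

The three morning photos end up in one cluster, while the evening photo and the one taken two days later each start their own.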

To verify their assumption, the authors tested PhotoBrowser against two other applications. The first, a Hierarchical Browser, organized the pictures into levels corresponding to different time granularities. The second, a Scrollable Browser, organized the pictures into a single time-ordered list that could be scrolled.
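To make the difference between the two baselines concrete, here is a hypothetical sketch of a year, month, day hierarchy like the one a Hierarchical Browser navigates; the Scrollable Browser would simply show `sorted(photos)` as one flat list. The nesting levels and the function name `build_hierarchy` are assumptions, not the study's implementation.

```python
from collections import defaultdict
from datetime import datetime


def build_hierarchy(timestamps):
    """Nest timestamps into year -> month -> day buckets.

    Each level corresponds to one time granularity (a hypothetical
    layout chosen for illustration).
    """
    tree = defaultdict(lambda: defaultdict(lambda: defaultdict(list)))
    for ts in sorted(timestamps):
        tree[ts.year][ts.month][ts.day].append(ts)
    return tree


photos = [
    datetime(2001, 12, 24, 20, 0),
    datetime(2002, 1, 1, 0, 5),
    datetime(2002, 1, 1, 12, 30),
    datetime(2002, 3, 15, 9, 0),
]
tree = build_hierarchy(photos)
for year in sorted(tree):
    for month in sorted(tree[year]):
        days = tree[year][month]
        print(year, month, {d: len(v) for d, v in sorted(days.items())})
```

Note that the fixed calendar levels can split a single event (here, a New Year's party spanning midnight would land on two different days), which is one intuition for why event-like temporal clusters may be easier to browse.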

The authors asked 12 subjects to perform 6 retrieval tasks following different criteria. Their main result was that the browser that organized the pictures into temporal clusters enabled significantly faster completion times, with an average of about 50 seconds.

Over the last few months, I have been collaborating on a research project on Multimodal Information Retrieval of digital pictures taken with camera phones. Recently, one of the papers summarizing the results of the research was presented at the International Workshop on Mobile Information Retrieval, held in conjunction with SIGIR in Singapore. Here are the abstract and the link to download the paper.

X. Anguera, N. Oliver, and M. Cherubini. Multimodal and mobile personal image retrieval: A user study. In K. L. Chan, editor, Proceedings of the International Workshop on Mobile Information Retrieval (MobIR'08), pages 17–23, Singapore, 20–24 July 2008. [PDF]

Mobile phones have become multimedia devices. Therefore it is not uncommon to observe users capturing photos and videos on their mobile phones. As the amount of digital multimedia content expands, it becomes increasingly difficult to find specific images in the device. In this paper, we present our experience with MAMI, a mobile phone prototype that allows users to annotate and search for digital photos on their camera phone via speech input. MAMI is implemented as a mobile application that runs in real-time on the phone. Users can add speech annotations at the time of capturing photos or at a later time. Additional metadata is also stored with the photos, such as location, user identification, date and time of capture and image-based features. Users can search for photos in their personal repository by means of speech without the need of connectivity to a server. In this paper, we focus on our findings from a user study aimed at comparing the efficacy of the search and the ease-of-use and desirability of the MAMI prototype when compared to the standard image browser available on mobile phones today.

T. Tan, J. Chen, P. Mulhem, and M. Kankanhalli. Smartalbum: a multi-modal photo annotation system. In MULTIMEDIA ’02: Proceedings of the tenth ACM international conference on Multimedia, pages 87–88, New York, NY, USA, 2002. ACM. [PDF]

——-

Applications supporting voice annotation of pictures include SmartAlbum (Tan et al., 2002), which unifies two indexing approaches, namely content-based and speech; the system proposed by Chen et al. (2001), which uses a structured speech syntax to annotate photographs in four fields (event, location, people, and date/time); Show&Tell (Srihari et al., 1999), which uses speech annotations to index and retrieve both personal and medical images; and FotoFile (Kuchinsky et al., 1999), which extends annotation to more general multimedia objects.
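As an illustration of why a fixed four-field structure helps retrieval, the sketch below stores each annotation as a record with event, location, people, and date fields and filters by any combination of them. It assumes the speech has already been transcribed into text; the class name `PhotoAnnotation`, the field names, and the matching rules are hypothetical and do not reproduce Chen et al.'s system.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class PhotoAnnotation:
    """Hypothetical four-field annotation (speech assumed already transcribed)."""
    filename: str
    event: str
    location: str
    people: List[str] = field(default_factory=list)
    when: Optional[date] = None


def matches(annotation, key, value):
    """`people` matches if the person is listed; other fields need equality."""
    actual = getattr(annotation, key)
    return value in actual if key == "people" else actual == value


def search(annotations, **criteria):
    """Return the annotations that satisfy every given field criterion."""
    return [a for a in annotations
            if all(matches(a, k, v) for k, v in criteria.items())]


album = [
    PhotoAnnotation("img_001.jpg", "birthday", "home", ["Anna", "Luca"], date(2001, 6, 2)),
    PhotoAnnotation("img_002.jpg", "trip", "Rome", ["Luca"], date(2001, 8, 14)),
    PhotoAnnotation("img_003.jpg", "trip", "Florence", ["Anna"], date(2001, 8, 16)),
]
hits = search(album, event="trip", people="Anna")
print([a.filename for a in hits])  # ['img_003.jpg']
```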

This demonstration presents a novel application (called SmartAlbum) for photo indexing and retrieval that unifies two different image indexing approaches. The system uses two modalities to extract information about a digital photograph; i.e. content-based and speech annotation for image description. The result is a powerful image retrieval tool that has capabilities beyond what current single-mode retrieval systems can offer. We show on a corpus of 1200 images the interest of our approach.
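The abstract does not say how SmartAlbum combines its two modalities. One common, simple option is weighted late fusion of per-image scores, sketched below under the assumptions that both modalities return scores in [0, 1] and that an image missing from one modality scores 0 there. The function name `fuse_scores` and the `weight` parameter are hypothetical.

```python
def fuse_scores(content_scores, speech_scores, weight=0.5):
    """Rank images by a weighted sum of two modality scores.

    `weight` (hypothetical) is the share given to the content-based
    score; the speech score receives the remaining 1 - weight.
    """
    images = set(content_scores) | set(speech_scores)
    fused = {
        img: weight * content_scores.get(img, 0.0)
        + (1 - weight) * speech_scores.get(img, 0.0)
        for img in images
    }
    # Highest combined score first.
    return sorted(fused.items(), key=lambda item: item[1], reverse=True)


content = {"a.jpg": 0.9, "b.jpg": 0.4, "c.jpg": 0.2}
speech = {"b.jpg": 1.0, "c.jpg": 0.1}
ranking = fuse_scores(content, speech)
print([img for img, _ in ranking])  # ['b.jpg', 'a.jpg', 'c.jpg']
```

Here `b.jpg` wins because a strong speech match compensates for a mediocre visual score, which is the kind of benefit a multi-mode system can offer over a single-mode one.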

A. Girgensohn, J. Adcock, M. Cooper, J. Foote, and L. Wilcox. Simplifying the management of large photo collections. In Proceedings of INTERACT’03, pages 196–203. IOS Press, 2003. [PDF]

——–

This paper contains useful references showing that event segmentation has been observed to be a valid criterion for organizing pictures. The paper reports an algorithm that was compared with those of Graham et al. (2002), Platt et al. (2002), and Loui and Savakis (2000).

With digital still cameras, users can easily collect thousands of photos. Our goal is to make organizing and browsing photos simple and quick, while retaining scalability to large collections. To that end, we created a photo management application concentrating on areas that improve the overall experience without neglecting the mundane components of such an application. Our application automatically divides photos into meaningful events such as birthdays or trips. Several user interaction mechanisms enhance the user experience when organizing photos. Our application combines a light table for showing thumbnails of the entire photo collection with a tree view that supports navigating, sorting, and filtering photos by categories such as dates, events, people, and locations. A calendar view visualizes photos over time and allows for the quick assignment of dates to scanned photos. We fine-tuned our application by using it with large personal photo collections provided by several users.
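Unlike a single fixed threshold, event segmentation in this line of work often adapts to the local shooting rhythm. The sketch below is a generic adaptive-threshold variant, not the algorithm of this paper or of the ones it is compared with: a gap between consecutive photos marks an event boundary when its log duration exceeds the mean log duration of the surrounding gaps by at least `k`. Timestamps are assumed to be in seconds, and `d` (window half-width) and `k` (offset) are hypothetical tuning parameters.

```python
import math


def adaptive_boundaries(timestamps, d=2, k=math.log(10)):
    """Return indices i such that a new event starts at photo i.

    Gap i (between photos i and i+1) is a boundary when its log duration
    is at least `k` above the mean log duration of the up to 2*d + 1
    gaps centered on it. `d` and `k` are hypothetical parameters.
    """
    ts = sorted(timestamps)
    # Log of each inter-photo gap; +1 avoids log(0) for burst shots.
    logs = [math.log(b - a + 1) for a, b in zip(ts, ts[1:])]
    boundaries = []
    for i, g in enumerate(logs):
        window = logs[max(0, i - d): i + d + 1]
        if g >= k + sum(window) / len(window):
            boundaries.append(i + 1)
    return boundaries


# Five photos one minute apart, a pause of about ten hours, then four more.
times = [0, 60, 120, 180, 240, 36000, 36060, 36120, 36180]
print(adaptive_boundaries(times))  # [5]
```

Because the threshold is relative to nearby gaps, the same pause can count as a boundary during a burst of rapid shots but not during a slow, sparse stretch of the collection.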

R. Wasinger and A. Krüger. Modality preference – learning from users. In Proceedings of the User Experience Design for Pervasive Computing workshop, Munich, Germany, 11 May 2005. [PDF]

——-

This paper describes a qualitative comparison of input modalities for mobile applications. The authors conducted a user study with about 50 users who were asked to fill in questionnaires on a PDA. The questions targeted products that the users could find in a shop and therefore had to be answered on site. The users were divided into groups, and each group was exposed to a different input modality: speech, handwriting, intra- and extra-gestures.

Interestingly, speech was reported as more comfortable to use than handwriting, but also as the modality most likely to compromise privacy: users reported being uncomfortable speaking sensitive information to their devices. Handwriting was seen as conflicting with other manual activities that needed to be carried out while shopping.